Kashyap Chitta

I am a Postdoctoral Researcher at the NVIDIA Autonomous Vehicle Research Group working from Tübingen, Germany. My research focuses on simulation-based training and evaluation of Physical AI systems. Representative papers are highlighted below.

News: The Physical AI Autonomous Vehicles Dataset is now available! I am also co-organizing the CVPR 2026 Workshop on Simulation for Autonomous Driving. We look forward to contributions of both original work and papers previously submitted to or published at other venues!

Bio: Kashyap completed a bachelor's degree in electronics at the RV College of Engineering, India. He then moved to the US in 2017 to obtain a master's degree in computer vision from Carnegie Mellon University, where he was advised by Prof. Martial Hebert. During this time, he was also an intern at the NVIDIA Autonomous Vehicles Applied Research Group, working with Dr. Jose M. Alvarez. From 2019, he was a PhD student in the Autonomous Vision Group at the University of Tübingen, Germany, supervised by Prof. Andreas Geiger. He was selected for the doctoral consortium at ICCV 2023, as a 2023 RSS Pioneer, and as an outstanding reviewer for CVPR, ICCV, ECCV, and NeurIPS. He has also won multiple autonomous driving challenge awards [nuPlan 2023] [CARLA 2020, 2021, 2022, 2023, 2024] [Waymo 2025] [HUGSIM 2025].

Publications

Long Nguyen, Micha Fauth, Bernhard Jaeger, Daniel Dauner, Maximilian Igl, Andreas Geiger, Kashyap Chitta

Conference on Computer Vision and Pattern Recognition (CVPR), 2026

@inproceedings{Nguyen2026CVPR,
  author = {Long Nguyen and Micha Fauth and Bernhard Jaeger and Daniel Dauner and Maximilian Igl and Andreas Geiger and Kashyap Chitta},
  title = {LEAD: Minimizing Learner-Expert Asymmetry in End-to-End Driving},
  booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2026},
}

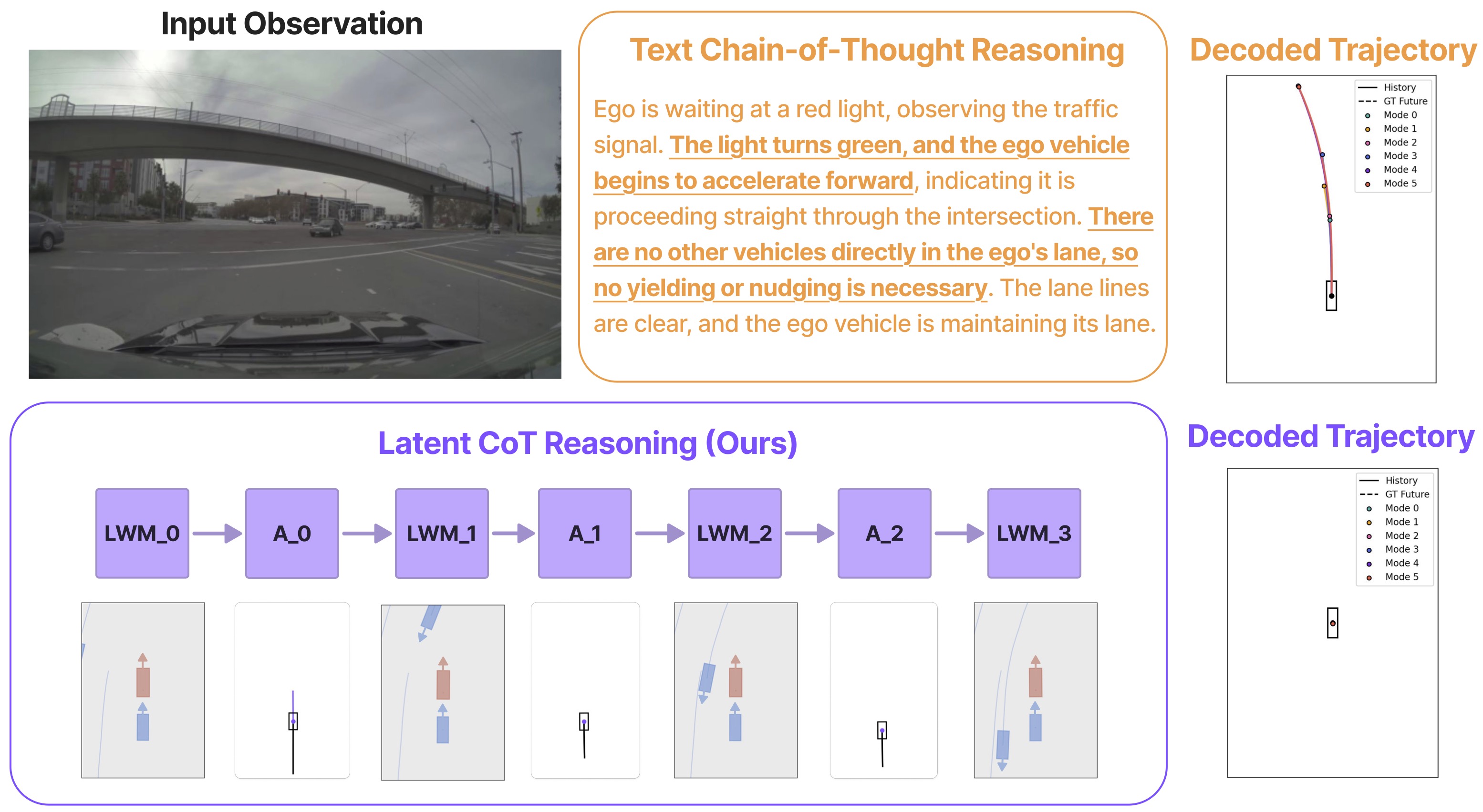

Shuhan Tan, Kashyap Chitta, Yuxiao Chen, Ran Tian, Yurong You, Yan Wang, Wenjie Luo, Yulong Cao, Philipp Krahenbuhl, Marco Pavone, Boris Ivanovic

Conference on Computer Vision and Pattern Recognition (CVPR), 2026

@inproceedings{Tan2026CVPR,
  author = {Shuhan Tan and Kashyap Chitta and Yuxiao Chen and Ran Tian and Yurong You and Yan Wang and Wenjie Luo and Yulong Cao and Philipp Krahenbuhl and Marco Pavone and Boris Ivanovic},
  title = {Latent Chain-of-Thought World Modeling for End-to-End Driving},
  booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2026},
}

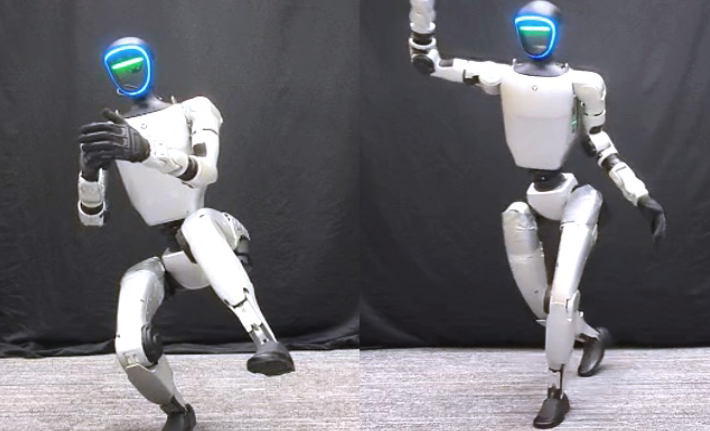

Yixuan Pan*, Ruoyi Qiao*, Li Chen, Kashyap Chitta, Liang Pan, Haoguang Mai, Qingwen Bu, Hao Zhao, Cunyuan Zheng, Ping Luo, Hongyang Li

International Conference on Robotics and Automation (ICRA), 2026

@inproceedings{Pan2026ICRA,
  author = {Yixuan Pan and Ruoyi Qiao and Li Chen and Kashyap Chitta and Liang Pan and Haoguang Mai and Qingwen Bu and Hao Zhao and Cunyuan Zheng and Ping Luo and Hongyang Li},
  title = {Agility Meets Stability: Versatile Humanoid Control with Heterogeneous Data},
  booktitle = {International Conference on Robotics and Automation (ICRA)},
  year = {2026},
}

Jiazhi Yang, Kashyap Chitta, Shenyuan Gao, Long Chen, Yuqian Shao, Xiaosong Jia, Hongyang Li, Andreas Geiger, Xiangyu Yue, Li Chen

Advances in Neural Information Processing Systems (NeurIPS), 2025

@inproceedings{Yang2025NEURIPS,
  author = {Jiazhi Yang and Kashyap Chitta and Shenyuan Gao and Long Chen and Yuqian Shao and Xiaosong Jia and Hongyang Li and Andreas Geiger and Xiangyu Yue and Li Chen},
  title = {ReSim: Reliable World Simulation for Autonomous Driving},
  booktitle = {Advances in Neural Information Processing Systems (NeurIPS)},
  year = {2025},
}

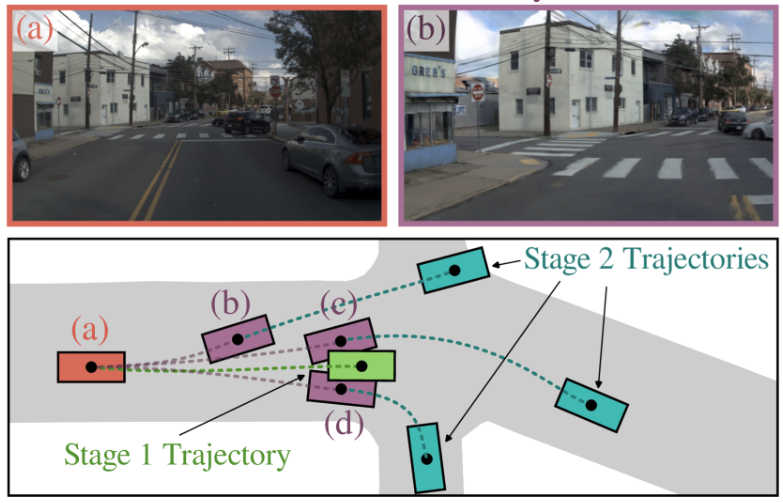

Wei Cao*, Marcel Hallgarten*, Tianyu Li*, Daniel Dauner, Xunjiang Gu, Caojun Wang, Yakov Miron, Marco Aiello, Hongyang Li, Igor Gilitschenski, Boris Ivanovic, Marco Pavone, Andreas Geiger, Kashyap Chitta

Conference on Robot Learning (CoRL), 2025

@inproceedings{Cao2025CORL,
  author = {Wei Cao and Marcel Hallgarten and Tianyu Li and Daniel Dauner and Xunjiang Gu and Caojun Wang and Yakov Miron and Marco Aiello and Hongyang Li and Igor Gilitschenski and Boris Ivanovic and Marco Pavone and Andreas Geiger and Kashyap Chitta},
  title = {Pseudo-Simulation for Autonomous Driving},
  booktitle = {Conference on Robot Learning (CoRL)},
  year = {2025},
}

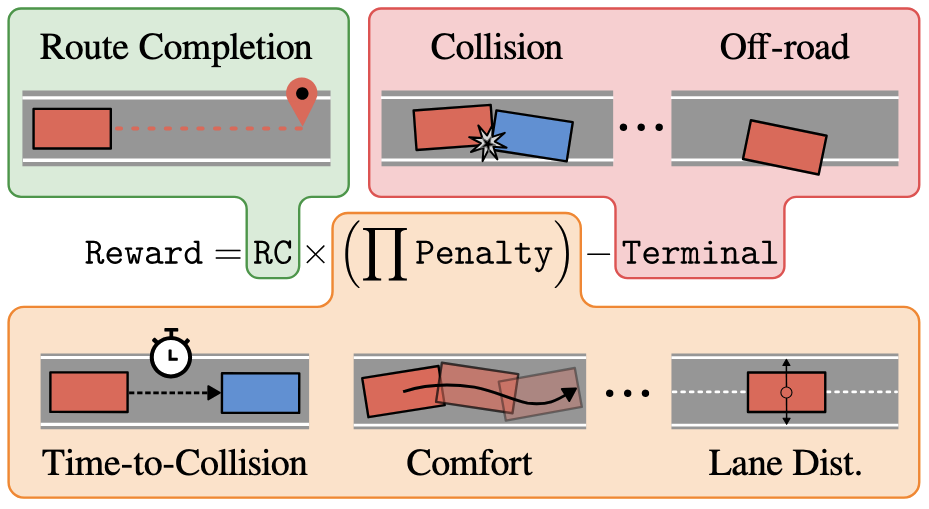

Bernhard Jaeger, Daniel Dauner, Jens Beißwenger, Simon Gerstenecker, Kashyap Chitta, Andreas Geiger

Conference on Robot Learning (CoRL), 2025

@inproceedings{Jaeger2025CORL,
  author = {Bernhard Jaeger and Daniel Dauner and Jens Beißwenger and Simon Gerstenecker and Kashyap Chitta and Andreas Geiger},
  title = {CaRL: Learning Scalable Planning Policies with Simple Rewards},
  booktitle = {Conference on Robot Learning (CoRL)},
  year = {2025},
}

Shenyuan Gao, Jiazhi Yang, Li Chen, Kashyap Chitta, Yihang Qiu, Andreas Geiger, Jun Zhang, Hongyang Li

Advances in Neural Information Processing Systems (NeurIPS), 2024

@inproceedings{Gao2024NEURIPS,
  author = {Shenyuan Gao and Jiazhi Yang and Li Chen and Kashyap Chitta and Yihang Qiu and Andreas Geiger and Jun Zhang and Hongyang Li},
  title = {Vista: A Generalizable Driving World Model with High Fidelity and Versatile Controllability},
  booktitle = {Advances in Neural Information Processing Systems (NeurIPS)},
  year = {2024},
}

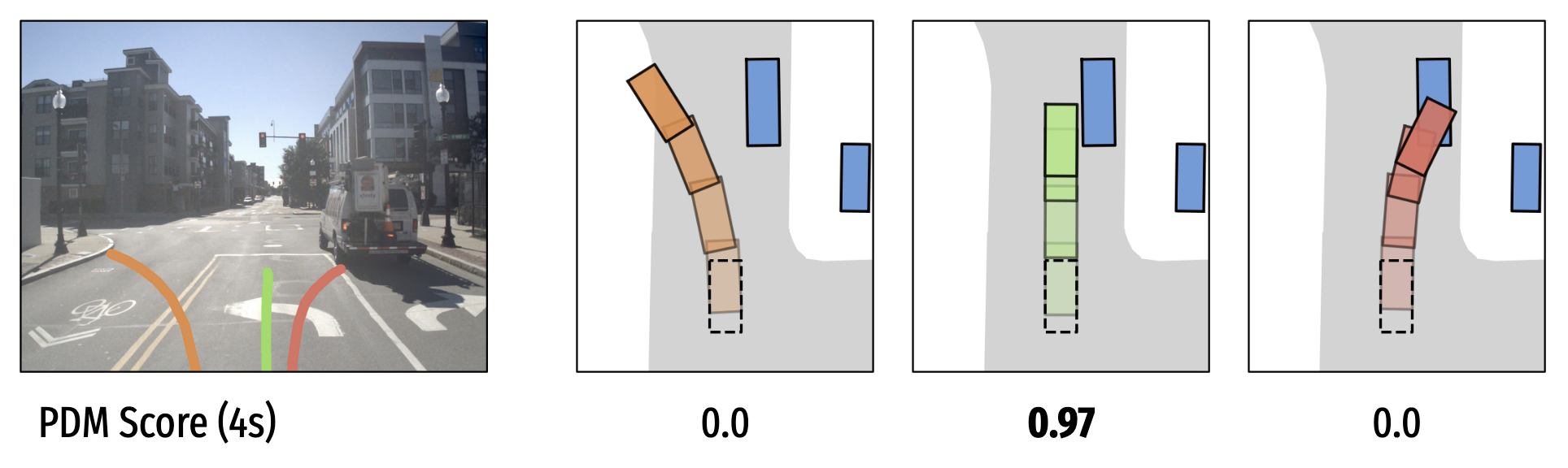

Daniel Dauner, Marcel Hallgarten, Tianyu Li, Xinshuo Weng, Zhiyu Huang, Zetong Yang, Hongyang Li, Igor Gilitschenski, Boris Ivanovic, Marco Pavone, Andreas Geiger, Kashyap Chitta

Advances in Neural Information Processing Systems (NeurIPS), 2024

@inproceedings{Dauner2024NEURIPS,
  author = {Daniel Dauner and Marcel Hallgarten and Tianyu Li and Xinshuo Weng and Zhiyu Huang and Zetong Yang and Hongyang Li and Igor Gilitschenski and Boris Ivanovic and Marco Pavone and Andreas Geiger and Kashyap Chitta},
  title = {NAVSIM: Data-Driven Non-Reactive Autonomous Vehicle Simulation and Benchmarking},
  booktitle = {Advances in Neural Information Processing Systems (NeurIPS)},
  year = {2024},
}

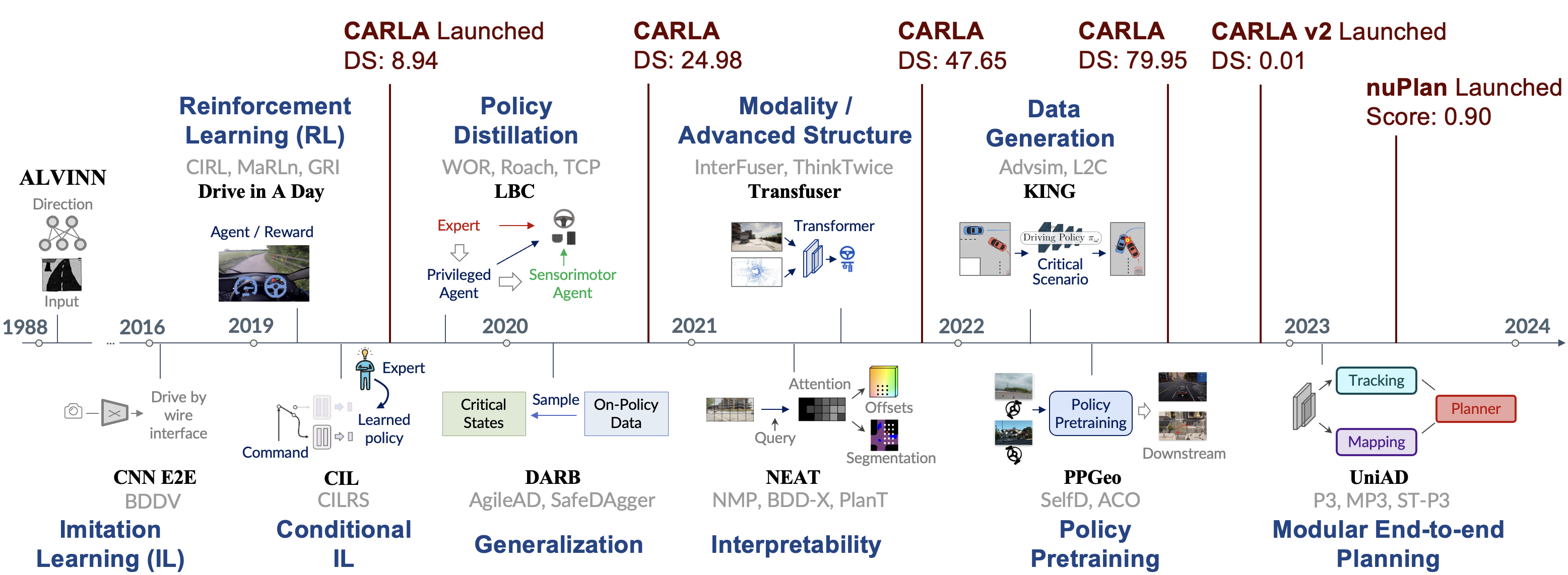

Li Chen, Penghao Wu, Kashyap Chitta, Bernhard Jaeger, Andreas Geiger, Hongyang Li

Transactions on Pattern Analysis and Machine Intelligence (T-PAMI), 2024

@article{Chen2024PAMI,
  author = {Li Chen and Penghao Wu and Kashyap Chitta and Bernhard Jaeger and Andreas Geiger and Hongyang Li},
  title = {End-to-end Autonomous Driving: Challenges and Frontiers},
  journal = {Transactions on Pattern Analysis and Machine Intelligence (T-PAMI)},
  year = {2024},
}

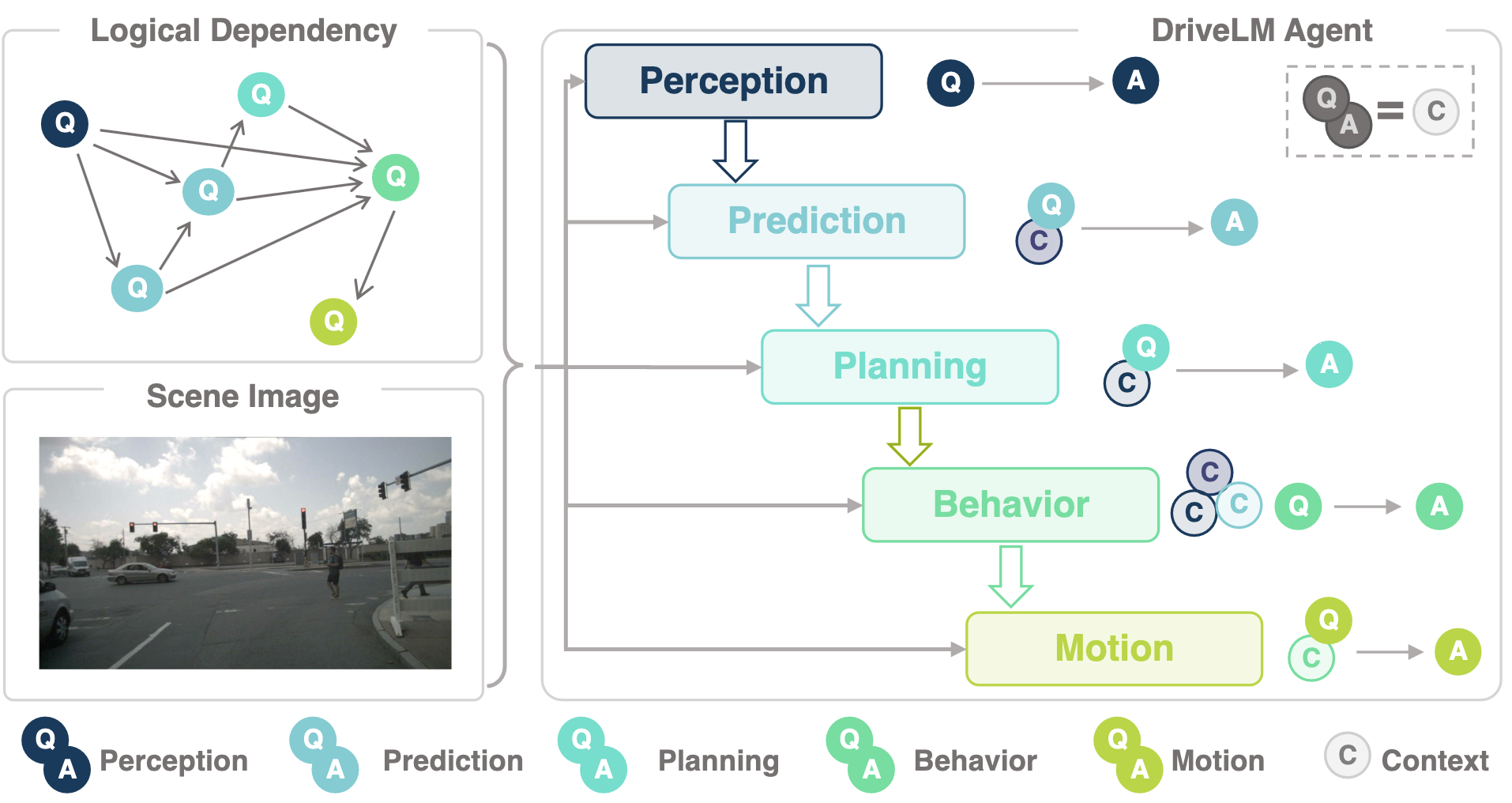

Chonghao Sima*, Katrin Renz*, Kashyap Chitta, Li Chen, Hanxue Zhang, Chengen Xie, Jens Beißwenger, Ping Luo, Andreas Geiger, Hongyang Li

European Conference on Computer Vision (ECCV), 2024

@inproceedings{Sima2024ECCV,
  author = {Chonghao Sima and Katrin Renz and Kashyap Chitta and Li Chen and Hanxue Zhang and Chengen Xie and Jens Beißwenger and Ping Luo and Andreas Geiger and Hongyang Li},
  title = {DriveLM: Driving with Graph Visual Question Answering},
  booktitle = {European Conference on Computer Vision (ECCV)},
  year = {2024},
}

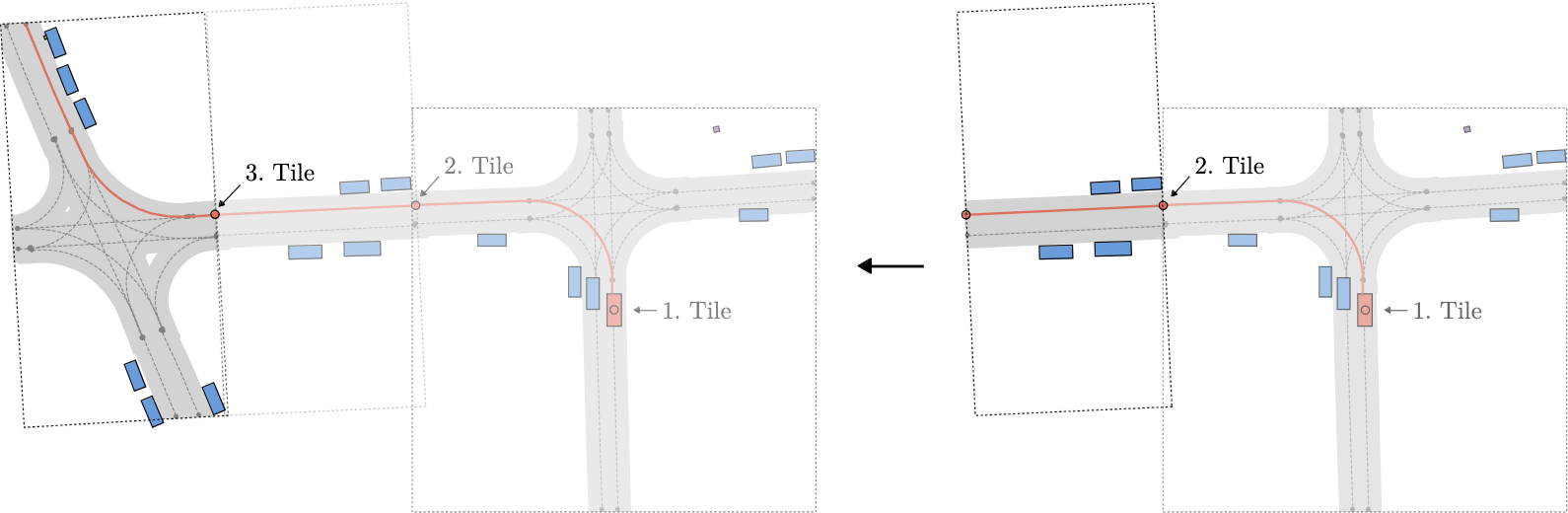

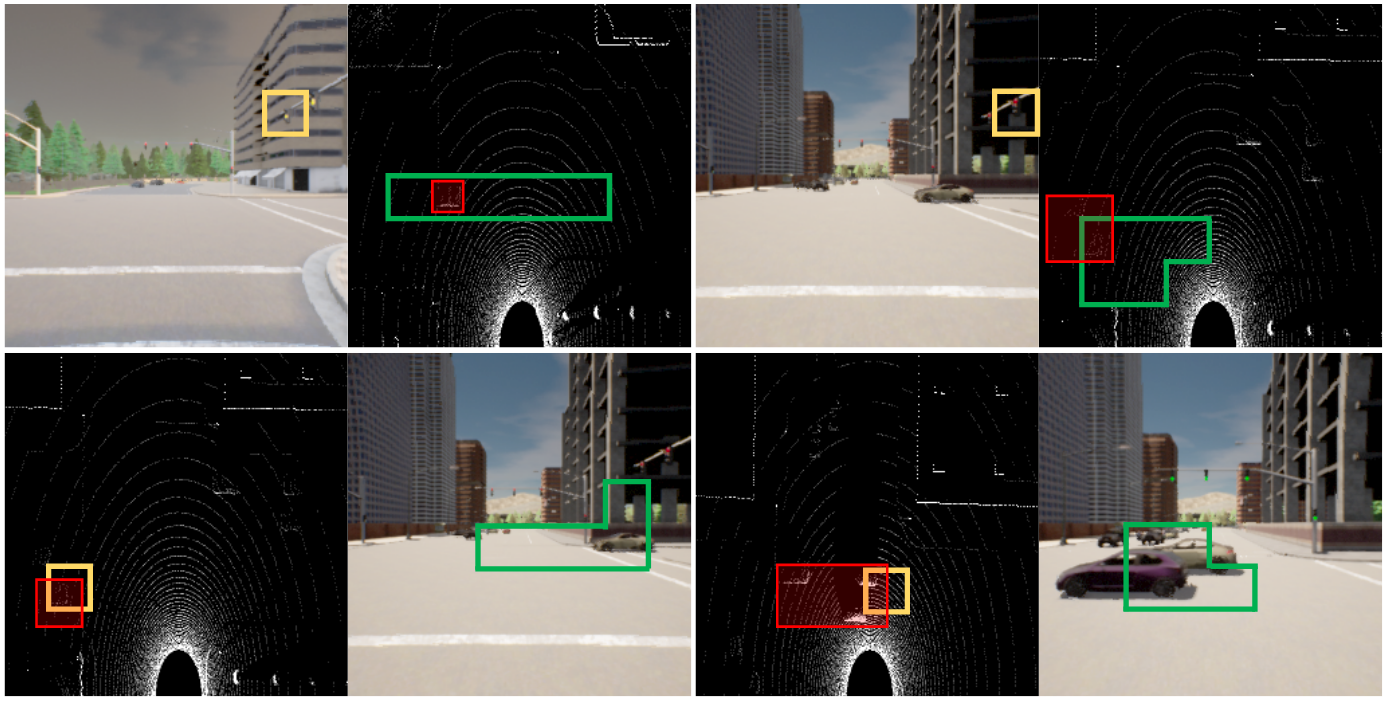

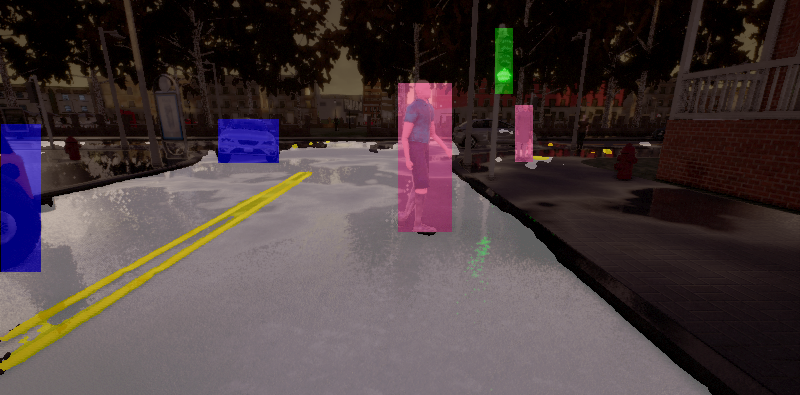

Kashyap Chitta*, Daniel Dauner*, Andreas Geiger

European Conference on Computer Vision (ECCV), 2024

@inproceedings{Chitta2024ECCV,
  author = {Kashyap Chitta and Daniel Dauner and Andreas Geiger},
  title = {SLEDGE: Synthesizing Driving Environments with Generative Models and Rule-Based Traffic},
  booktitle = {European Conference on Computer Vision (ECCV)},
  year = {2024},
}

Jiazhi Yang*, Shenyuan Gao*, Yihang Qiu*, Li Chen, Tianyu Li, Bo Dai, Kashyap Chitta, Penghao Wu, Jia Zeng, Ping Luo, Jun Zhang, Andreas Geiger, Yu Qiao, Hongyang Li

Conference on Computer Vision and Pattern Recognition (CVPR), 2024

@inproceedings{Yang2024CVPR,
  author = {Jiazhi Yang and Shenyuan Gao and Yihang Qiu and Li Chen and Tianyu Li and Bo Dai and Kashyap Chitta and Penghao Wu and Jia Zeng and Ping Luo and Jun Zhang and Andreas Geiger and Yu Qiao and Hongyang Li},
  title = {Generalized Predictive Model for Autonomous Driving},
  booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2024},
}

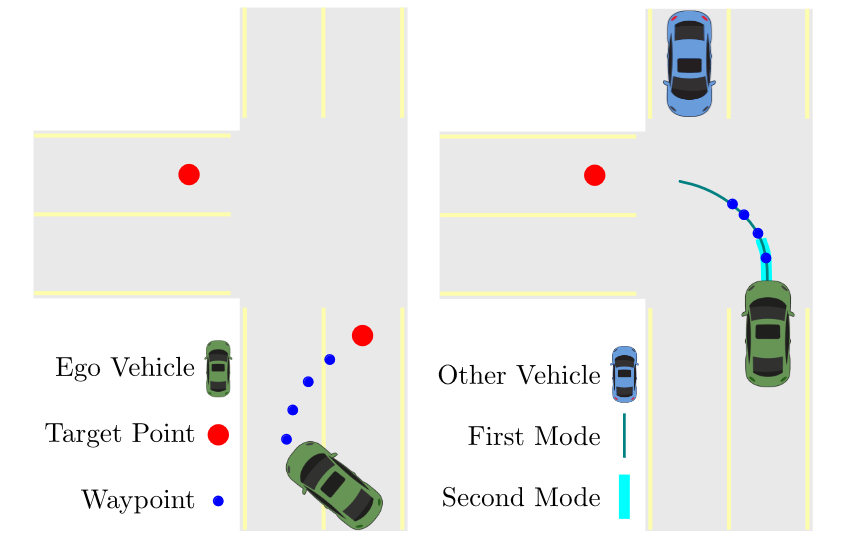

Daniel Dauner, Marcel Hallgarten, Andreas Geiger, Kashyap Chitta

Conference on Robot Learning (CoRL), 2023

@inproceedings{Dauner2023CORL,
  author = {Daniel Dauner and Marcel Hallgarten and Andreas Geiger and Kashyap Chitta},
  title = {Parting with Misconceptions about Learning-based Vehicle Motion Planning},
  booktitle = {Conference on Robot Learning (CoRL)},
  year = {2023},
}

Bernhard Jaeger, Kashyap Chitta, Andreas Geiger

International Conference on Computer Vision (ICCV), 2023

@inproceedings{Jaeger2023ICCV,
  author = {Bernhard Jaeger and Kashyap Chitta and Andreas Geiger},
  title = {Hidden Biases of End-to-End Driving Models},
  booktitle = {International Conference on Computer Vision (ICCV)},
  year = {2023},
}

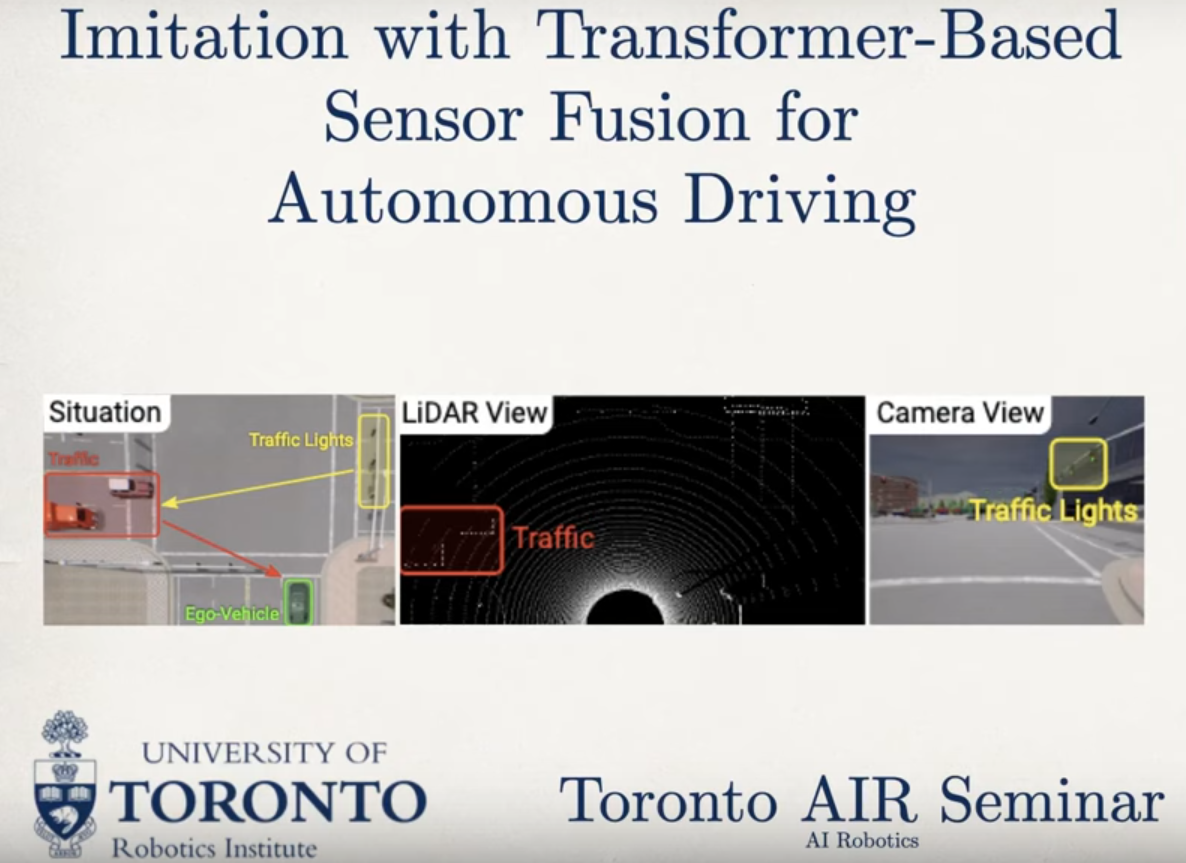

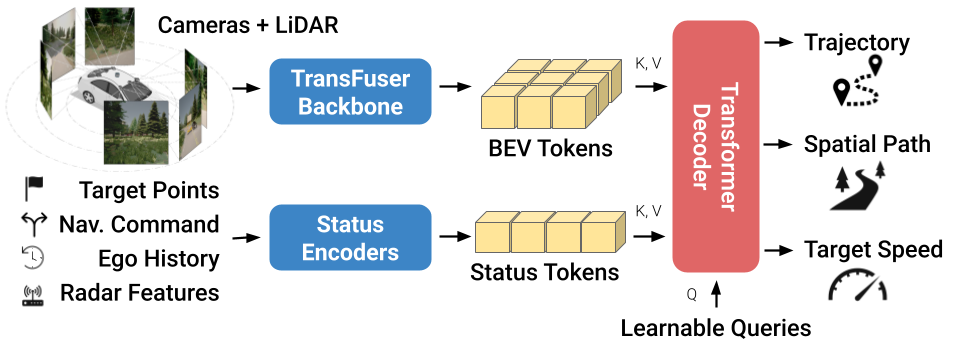

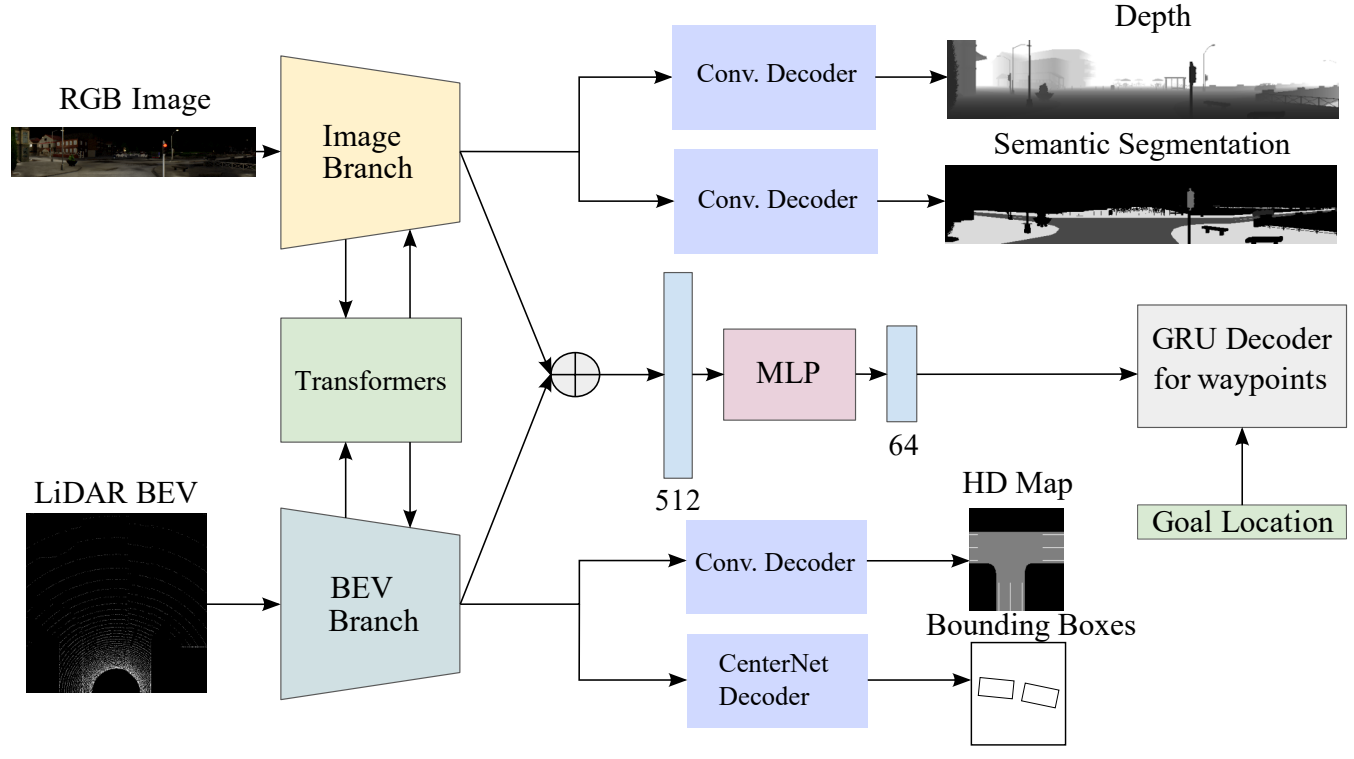

Kashyap Chitta, Aditya Prakash, Bernhard Jaeger, Zehao Yu, Katrin Renz, Andreas Geiger

Transactions on Pattern Analysis and Machine Intelligence (T-PAMI), 2023

@article{Chitta2023PAMI,
  author = {Kashyap Chitta and Aditya Prakash and Bernhard Jaeger and Zehao Yu and Katrin Renz and Andreas Geiger},
  title = {TransFuser: Imitation with Transformer-Based Sensor Fusion for Autonomous Driving},
  journal = {Transactions on Pattern Analysis and Machine Intelligence (T-PAMI)},
  year = {2023},
}

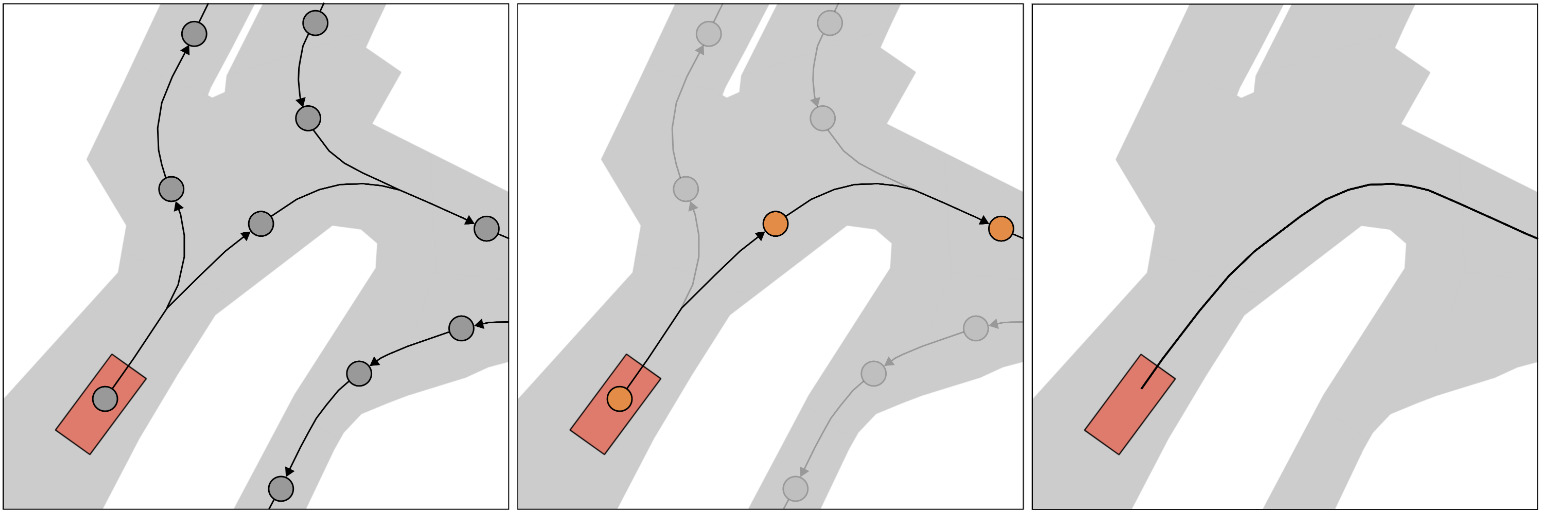

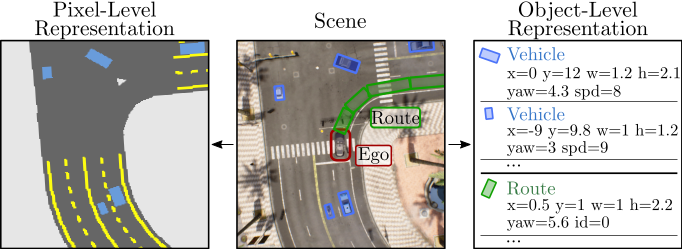

Katrin Renz, Kashyap Chitta, Otniel-Bogdan Mercea, Sophia Koepke, Zeynep Akata, Andreas Geiger

Conference on Robot Learning (CoRL), 2022

@inproceedings{Renz2022CORL,
  author = {Katrin Renz and Kashyap Chitta and Otniel-Bogdan Mercea and Sophia Koepke and Zeynep Akata and Andreas Geiger},
  title = {PlanT: Explainable Planning Transformers via Object-Level Representations},
  booktitle = {Conference on Robot Learning (CoRL)},
  year = {2022},
}

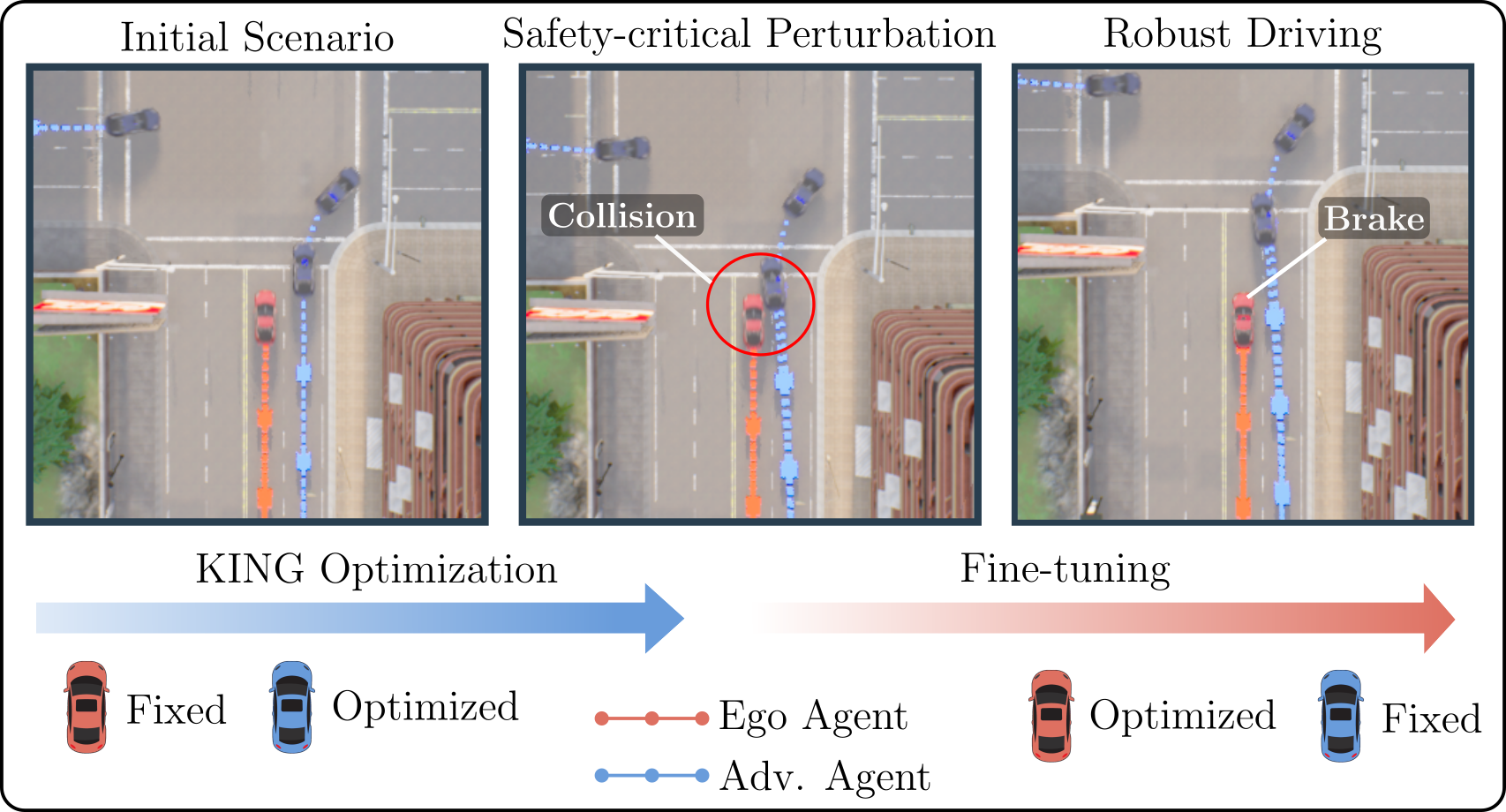

Niklas Hanselmann, Katrin Renz, Kashyap Chitta, Apratim Bhattacharyya, Andreas Geiger

European Conference on Computer Vision (ECCV), 2022

@inproceedings{Hanselmann2022ECCV,
  author = {Niklas Hanselmann and Katrin Renz and Kashyap Chitta and Apratim Bhattacharyya and Andreas Geiger},
  title = {KING: Generating Safety-Critical Driving Scenarios for Robust Imitation via Kinematics Gradients},
  booktitle = {European Conference on Computer Vision (ECCV)},
  year = {2022},
}

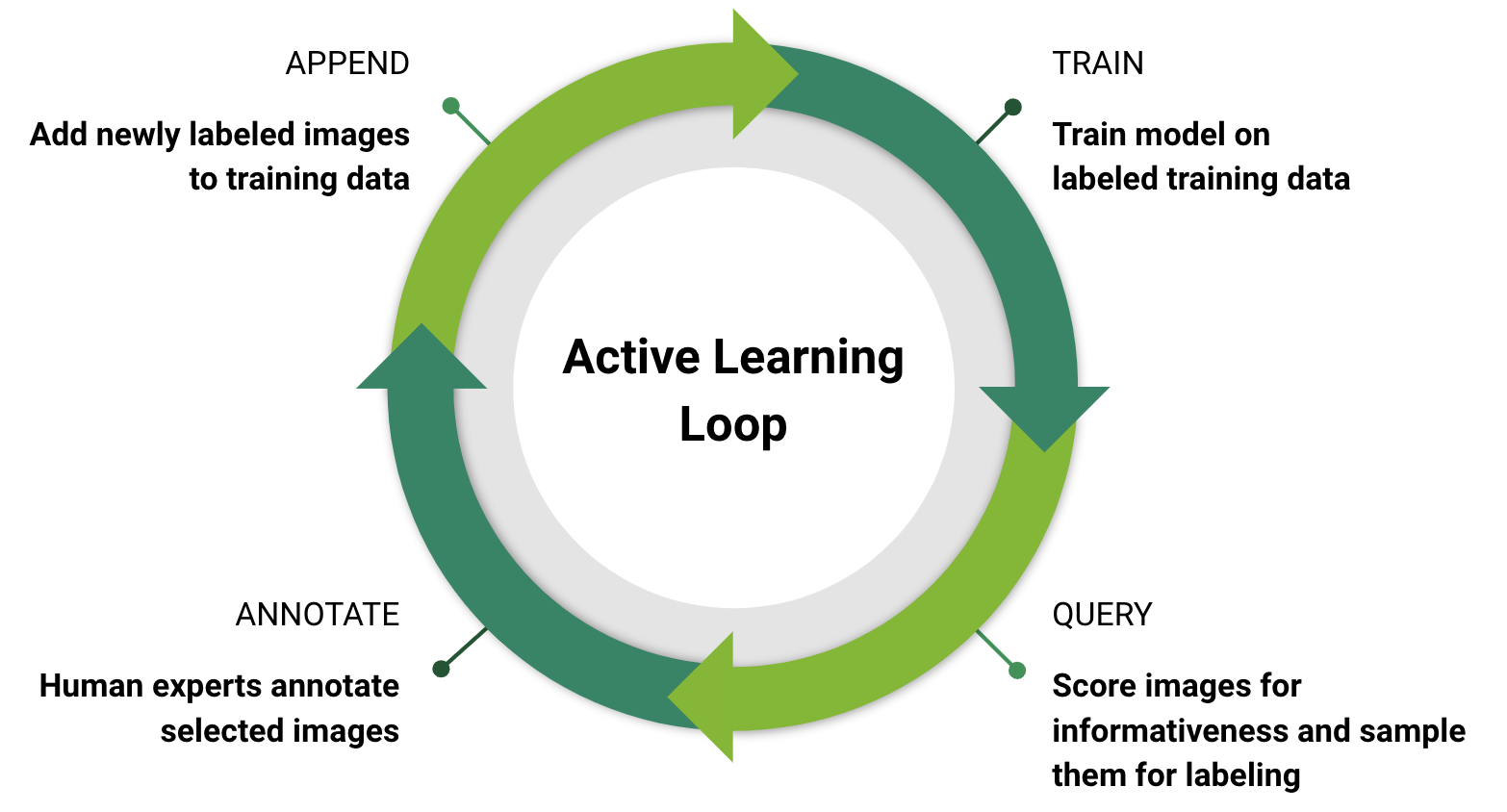

Kashyap Chitta, Jose Alvarez, Elmar Haussmann, Clement Farabet

Transactions on Intelligent Transportation Systems (T-ITS), 2021

@article{Chitta2021ITS,
  author = {Kashyap Chitta and Jose Alvarez and Elmar Haussmann and Clement Farabet},
  title = {Training Data Subset Search with Ensemble Active Learning},
  journal = {Transactions on Intelligent Transportation Systems (T-ITS)},
  year = {2021},
}

Axel Sauer, Kashyap Chitta, Jens Muller, Andreas Geiger

Advances in Neural Information Processing Systems (NeurIPS), 2021

@inproceedings{Sauer2021NEURIPS,
  author = {Axel Sauer and Kashyap Chitta and Jens Muller and Andreas Geiger},
  title = {Projected GANs Converge Faster},
  booktitle = {Advances in Neural Information Processing Systems (NeurIPS)},
  year = {2021},
}

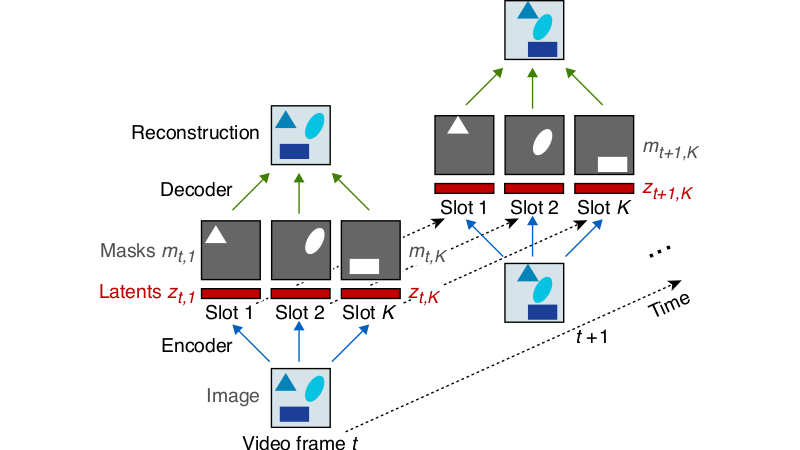

Marissa Weis, Kashyap Chitta, Yash Sharma, Wieland Brendel, Matthias Bethge, Andreas Geiger, Alexander Ecker

Journal of Machine Learning Research (JMLR), 2021

@article{Weis2021JMLR,
  author = {Marissa Weis and Kashyap Chitta and Yash Sharma and Wieland Brendel and Matthias Bethge and Andreas Geiger and Alexander Ecker},
  title = {Benchmarking Unsupervised Object Representations for Video Sequences},
  journal = {Journal of Machine Learning Research (JMLR)},
  year = {2021},
}

Kashyap Chitta*, Aditya Prakash*, Andreas Geiger

International Conference on Computer Vision (ICCV), 2021

@inproceedings{Chitta2021ICCV,
  author = {Kashyap Chitta and Aditya Prakash and Andreas Geiger},
  title = {NEAT: Neural Attention Fields for End-to-End Autonomous Driving},
  booktitle = {International Conference on Computer Vision (ICCV)},
  year = {2021},
}

Aditya Prakash*, Kashyap Chitta*, Andreas Geiger

Conference on Computer Vision and Pattern Recognition (CVPR), 2021

@inproceedings{Prakash2021CVPR,
  author = {Aditya Prakash and Kashyap Chitta and Andreas Geiger},
  title = {Multi-Modal Fusion Transformer for End-to-End Autonomous Driving},
  booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2021},
}

Aseem Behl*, Kashyap Chitta*, Aditya Prakash, Eshed Ohn-Bar, Andreas Geiger

International Conference on Intelligent Robots and Systems (IROS), 2020

@inproceedings{Behl2020IROS,
  author = {Aseem Behl and Kashyap Chitta and Aditya Prakash and Eshed Ohn-Bar and Andreas Geiger},
  title = {Label Efficient Visual Abstractions for Autonomous Driving},
  booktitle = {International Conference on Intelligent Robots and Systems (IROS)},
  year = {2020},
}

Elmar Haussmann, Michele Fenzi, Kashyap Chitta, Jan Ivanecky, Hanson Xu, Donna Roy, Akshita Mittel, Nicolas Koumchatzky, Clement Farabet, Jose Alvarez

Intelligent Vehicles Symposium (IV), 2020

@inproceedings{Haussmann2020IV,
  author = {Elmar Haussmann and Michele Fenzi and Kashyap Chitta and Jan Ivanecky and Hanson Xu and Donna Roy and Akshita Mittel and Nicolas Koumchatzky and Clement Farabet and Jose Alvarez},
  title = {Scalable Active Learning for Object Detection},
  booktitle = {Intelligent Vehicles Symposium (IV)},
  year = {2020},
}

Aditya Prakash, Aseem Behl, Eshed Ohn-Bar, Kashyap Chitta, Andreas Geiger

Conference on Computer Vision and Pattern Recognition (CVPR), 2020

@inproceedings{Prakash2020CVPR,
  author = {Aditya Prakash and Aseem Behl and Eshed Ohn-Bar and Kashyap Chitta and Andreas Geiger},
  title = {Exploring Data Aggregation in Policy Learning for Vision-Based Urban Autonomous Driving},
  booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2020},
}

Eshed Ohn-Bar, Aditya Prakash, Aseem Behl, Kashyap Chitta, Andreas Geiger

Conference on Computer Vision and Pattern Recognition (CVPR), 2020

@inproceedings{Ohn-bar2020CVPR,
  author = {Eshed Ohn-Bar and Aditya Prakash and Aseem Behl and Kashyap Chitta and Andreas Geiger},
  title = {Learning Situational Driving},
  booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2020},
}

Kashyap Chitta, Jose Alvarez, Martial Hebert

Winter Conference on Applications of Computer Vision (WACV), 2020

@inproceedings{Chitta2020WACV,
  author = {Kashyap Chitta and Jose Alvarez and Martial Hebert},
  title = {Quadtree Generating Networks: Efficient Hierarchical Scene Parsing with Sparse Convolutions},
  booktitle = {Winter Conference on Applications of Computer Vision (WACV)},
  year = {2020},
}

Selected Talks

This website is based on the lightweight and easy-to-use template from Michael Niemeyer. Check out his GitHub repository for instructions on how to use it!